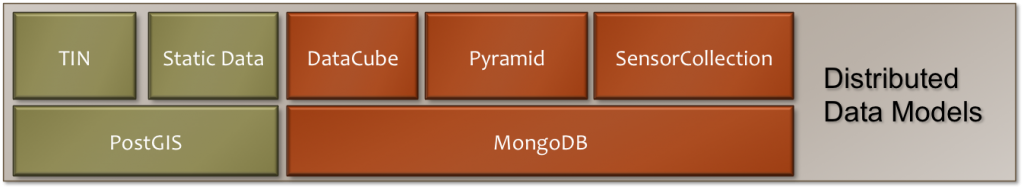

The Geoanalytics distributed data models are ready-to-use data models that cover the most common ways data is organized. They are also built to scale out to large and streaming datasets that other packages do not currently handle well.

DataCube

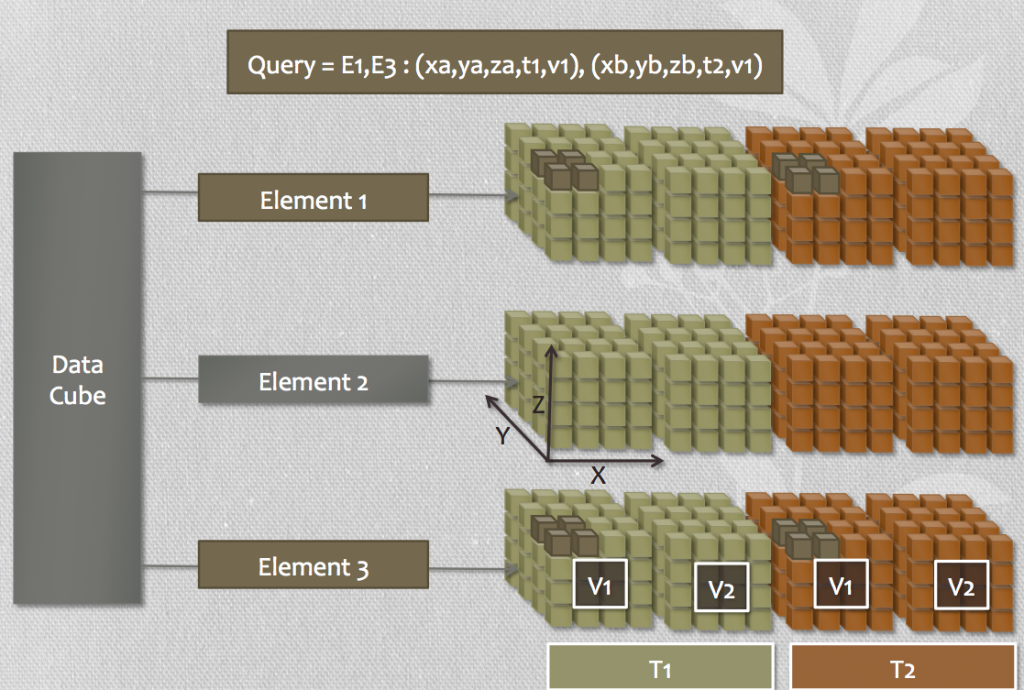

Store and query versioned, regularly gridded 4D data.

If your data is in regularly gridded NetCDF or GRIB format, or is a stack of GeoTIFFs, this is the model to use. The DataCube handles time series of regular grids and allows multiple versions to exist for the same time. For the Python programmer, the DataCube selects data into a regular NumPy array that can then be manipulated with R, C, Fortran, or SciPy. At the web-service level, WMS and WCS give access to the underlying data or to a paletted map overlay service.

The DataCube groups logically related data elements into a single cube. Each element is comparable to a column in a database table or a “variable” in a NetCDF dataset. A user queries one or more elements for a given slice of x, y, z, and time and gets back either a single sample or an array.
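The slice query described above can be sketched in a few lines of plain Python. This is a toy illustration, not the Geoanalytics API: the `ToyCube` class, its `add_element` and `select` methods, and the nested-list storage are all hypothetical stand-ins (a real cube would return NumPy arrays).

```python
from bisect import bisect_left, bisect_right


class ToyCube:
    """Hypothetical stand-in for a data cube: one regular 4D grid per element."""

    def __init__(self, xs, ys, zs, ts):
        # Sorted coordinate values along each of the four axes.
        self.axes = {"x": xs, "y": ys, "z": zs, "t": ts}
        self.elements = {}  # element name -> nested list indexed [t][z][y][x]

    def add_element(self, name, fill):
        # fill(t, z, y, x) supplies the value stored at each grid point.
        a = self.axes
        self.elements[name] = [
            [[[fill(t, z, y, x) for x in a["x"]] for y in a["y"]] for z in a["z"]]
            for t in a["t"]
        ]

    def _index_range(self, axis, bounds):
        # Convert inclusive coordinate bounds into a half-open index range.
        lo, hi = bounds
        coords = self.axes[axis]
        return bisect_left(coords, lo), bisect_right(coords, hi)

    def select(self, names, x, y, z, t):
        """Return {element name: nested list} for the requested slice."""
        x0, x1 = self._index_range("x", x)
        y0, y1 = self._index_range("y", y)
        z0, z1 = self._index_range("z", z)
        t0, t1 = self._index_range("t", t)
        return {
            name: [
                [[row[x0:x1] for row in plane[y0:y1]] for plane in vol[z0:z1]]
                for vol in self.elements[name][t0:t1]
            ]
            for name in names
        }


cube = ToyCube(xs=[0.0, 1.0, 2.0, 3.0], ys=[0.0, 1.0, 2.0], zs=[0.0], ts=[0, 1])
cube.add_element("temperature", lambda t, z, y, x: 10 * t + x + y)

# One element, a 2x2 window in x and y, a single level, a single time step.
result = cube.select(["temperature"], x=(1.0, 2.0), y=(0.0, 1.0), z=(0.0, 0.0), t=(1, 1))
print(result["temperature"])
```

Because every element shares the same axes, one set of index ranges serves all the elements named in a query.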

Data can be reprojected in the query by specifying the target projection as a PROJ.4 string, an EPSG SRID, or a Well-Known Text (WKT) definition.
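To make concrete what a reprojection computes, here is a minimal sketch of one well-known transform: geographic longitude/latitude (EPSG:4326) to spherical Web Mercator (EPSG:3857). It is written by hand purely for illustration; the function name is hypothetical, and it does not show how Geoanalytics performs reprojection internally.

```python
import math

# WGS 84 semi-major axis in metres, the sphere radius used by EPSG:3857.
EARTH_RADIUS_M = 6378137.0


def lonlat_to_web_mercator(lon_deg, lat_deg):
    """Project longitude/latitude in degrees to Web Mercator metres."""
    x = EARTH_RADIUS_M * math.radians(lon_deg)
    y = EARTH_RADIUS_M * math.log(math.tan(math.pi / 4 + math.radians(lat_deg) / 2))
    return x, y


x, y = lonlat_to_web_mercator(-78.64, 35.78)
print(round(x), round(y))
```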

Additionally, the DataCube supports cube- and element-level key-value-pair metadata, which can be separately indexed and selected.
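As a rough sketch of how key-value-pair metadata can be indexed and selected independently of the gridded data, consider the inverted index below. The `MetadataIndex` class, its methods, and the cube and element names are hypothetical and chosen only for illustration.

```python
from collections import defaultdict


class MetadataIndex:
    """Hypothetical inverted index from (key, value) pairs to tagged targets."""

    def __init__(self):
        self._by_pair = defaultdict(set)

    def tag(self, target, **pairs):
        # Attach key-value metadata to a cube or to one of its elements.
        for key, value in pairs.items():
            self._by_pair[(key, value)].add(target)

    def select(self, **pairs):
        # Return the targets that carry every requested key-value pair.
        sets = [self._by_pair.get(pair, set()) for pair in pairs.items()]
        return set.intersection(*sets) if sets else set()


index = MetadataIndex()
index.tag("storm_surge", source="ADCIRC", units="m")        # cube-level metadata
index.tag("storm_surge/elevation", units="m")               # element-level metadata
index.tag("storm_surge/wind_speed", units="m/s")

print(sorted(index.select(units="m")))
```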

TIN (Irregular Grid)

Store and query versioned, irregularly gridded 4D data.

If you can’t use the DataCube but you have a time series of consistently gridded data, then the TIN will work for you. By consistently gridded, we mean data whose coordinates don’t change over time, even though the data points may be irregularly distributed in a triangular or polygonal network. This is the case for many environmental models, such as ADCIRC and SLOSH, where resolution is often higher in some places on the map than in others.

The TIN provides exactly the same services as the DataCube, but is optimized for handling irregularly but consistently gridded data over time.
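The idea of "irregular but consistent" gridding can be sketched as follows: node coordinates and triangles are stored once, and each time step stores only one value per node. The `ToyTin` class and its methods are hypothetical illustrations, not the Geoanalytics TIN interface.

```python
class ToyTin:
    """Hypothetical TIN: fixed mesh geometry, one list of node values per time."""

    def __init__(self, nodes, triangles):
        self.nodes = nodes          # list of (x, y), shared by all time steps
        self.triangles = triangles  # list of (i, j, k) indices into nodes
        self.steps = {}             # time -> list of values, one per node

    def add_step(self, time, values):
        if len(values) != len(self.nodes):
            raise ValueError("one value per node is required")
        self.steps[time] = list(values)

    def nodes_in_bbox(self, xmin, ymin, xmax, ymax):
        # The mesh never moves, so this lookup is valid for every time step.
        return [
            i for i, (x, y) in enumerate(self.nodes)
            if xmin <= x <= xmax and ymin <= y <= ymax
        ]

    def select(self, bbox, times):
        idx = self.nodes_in_bbox(*bbox)
        return {t: [self.steps[t][i] for i in idx] for t in times}


# Nodes are denser near the origin than elsewhere.
tin = ToyTin(
    nodes=[(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (0.1, 0.1)],
    triangles=[(0, 1, 3), (0, 3, 2), (1, 2, 3)],
)
tin.add_step(0, [0.0, 0.5, 0.4, 0.1])
tin.add_step(1, [0.2, 0.9, 0.7, 0.3])

print(tin.select(bbox=(0.0, 0.0, 0.5, 0.5), times=[0, 1]))
```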

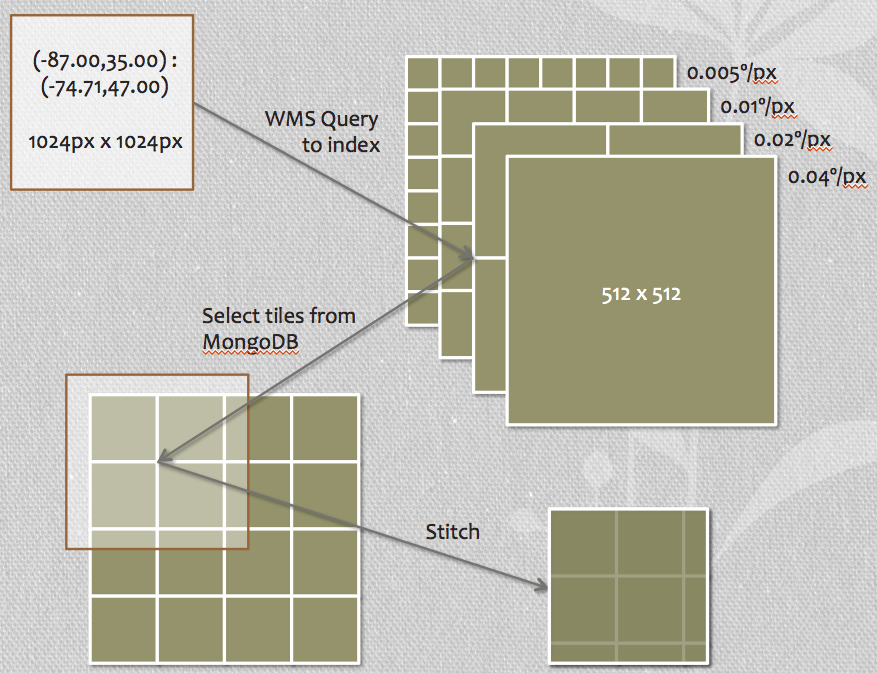

Pyramid

Store/query millions of tiles of imagery at multiple levels of detail (LODs) as a set.

The pyramid is meant for storing extremely large images with possibly millions of individual tiles per dataset. The Geoanalytics image pyramid scales out by using sharded MongoDB collections to store large collections of tiles efficiently. Unlike many other tiling systems, the Geoanalytics image pyramid can handle and serve 16- and 32-bit imagery through WMS or WCS.

Additionally, the Geoanalytics image pyramid handles time series, elevation, and versioning similarly to the DataCube. In fact, the Pyramid can be thought of as the DataCube “on steroids”, since it can serve datasets in which individual slices are far larger than the available physical memory.
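The arithmetic behind a tiled pyramid shows why tile counts reach the millions and why a single request never needs the whole image in memory. The sketch below assumes 256-pixel square tiles and a halving of resolution per level; both are common conventions rather than documented Geoanalytics settings, and the function names are hypothetical.

```python
import math


def level_size(width, height, level):
    """Pixel size of the image at a level, where level 0 is full resolution."""
    scale = 2 ** level
    return math.ceil(width / scale), math.ceil(height / scale)


def tile_grid(width, height, level, tile=256):
    """Number of tile columns and rows needed to cover the image at a level."""
    w, h = level_size(width, height, level)
    return math.ceil(w / tile), math.ceil(h / tile)


def tile_key(px, py, level, tile=256):
    """(level, column, row) of the tile holding full-resolution pixel (px, py)."""
    scale = 2 ** level
    return level, (px // scale) // tile, (py // scale) // tile


# A 1,000,000 x 1,000,000 pixel image needs about 15 million tiles at level 0.
cols, rows = tile_grid(1_000_000, 1_000_000, level=0)
print(cols * rows)

# Serving one pixel location touches one small tile per level, not the whole image.
print(tile_key(1000, 300, level=0), tile_key(1000, 300, level=1))
```

A key of this shape is one plausible way to address an individual tile in a document store, although the actual key layout used by Geoanalytics is not described here.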

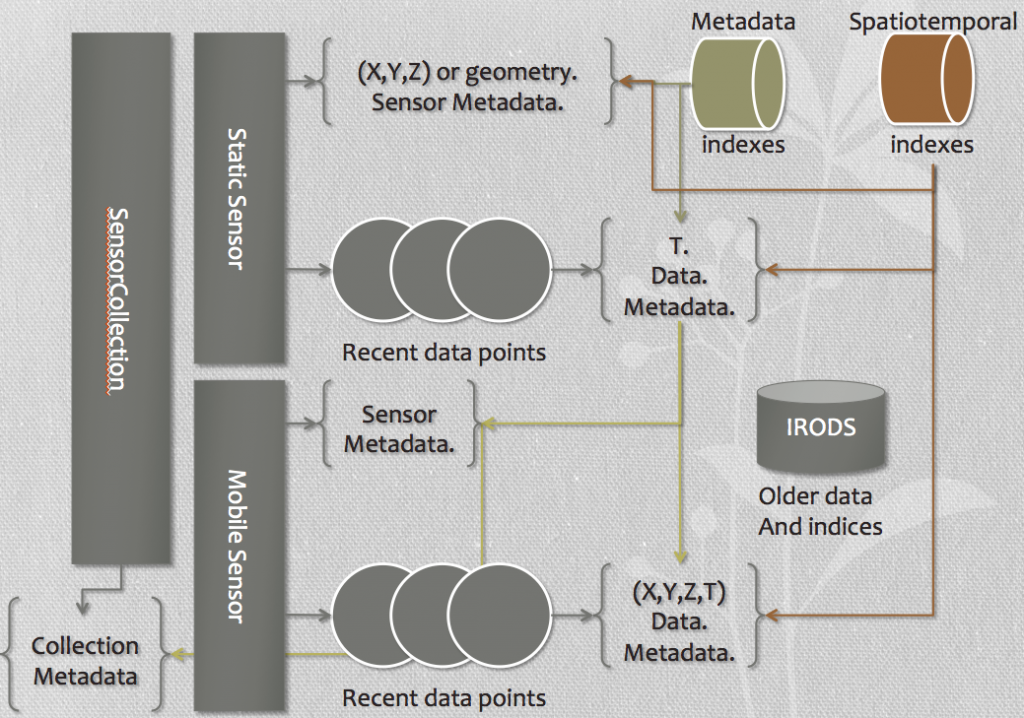

Sensor Collection

Store/query collections of data streams from mobile phones or sensors.

Use cases: Mobile device tracking, ad hoc or regular sensor networks.

The sensor collection model is meant to encapsulate multiple sensor data models and provide the framework for collecting, querying, and annotating the data in those models. The sensor collection exposes RESTful services for collecting data over HTTP(S) and can optionally allow sensors to register themselves. Metadata can be collected and indexed at the sensor collection, sensor, and sensor data levels through tags and key-value-pair metadata. Server-side queries return dictionaries of NumPy arrays. Client-side queries are filtered through WFS or custom services; the Sensor Observation Service (SOS) will be supported in the future. Old data is sunsetted through iRODS and Celery to keep live data from becoming unmanageable.
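Two of the ideas above, column-oriented query results and the sunsetting of old data, can be sketched in plain Python. Lists stand in for NumPy arrays, and the record fields and function names are hypothetical; the actual archiving path through iRODS and Celery is not shown.

```python
from datetime import datetime, timedelta


def to_columns(records, fields):
    """Turn a list of reading dicts into a dict of per-field columns."""
    return {field: [r[field] for r in records] for field in fields}


def split_by_age(records, now, max_age):
    """Separate live readings from those old enough to be archived."""
    cutoff = now - max_age
    live = [r for r in records if r["time"] >= cutoff]
    expired = [r for r in records if r["time"] < cutoff]
    return live, expired


now = datetime(2024, 1, 31, 12, 0)
readings = [
    {"sensor": "phone-1", "time": now - timedelta(days=40), "lon": -78.6, "lat": 35.8},
    {"sensor": "phone-1", "time": now - timedelta(hours=2), "lon": -78.7, "lat": 35.9},
    {"sensor": "buoy-7", "time": now - timedelta(minutes=5), "lon": -75.5, "lat": 35.2},
]

live, expired = split_by_age(readings, now, max_age=timedelta(days=30))
print(to_columns(live, ["sensor", "lon", "lat"]))
print(len(expired))
```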

Custom models for static data

Store/query static raster / vector datasets.

Use case: Data with complex query patterns, or traditional structured database tables with vector or attached raster data.

- GeoDjango ORM for appserver / PostGIS integration.

- MongoDB-backed fields for irregular objects.

- PostGIS and MongoDB integration, allowing ForeignKey relations between Mongo documents and PostGIS records.

- MongoFS for large raster files or large numbers of raster files.

- Serve any GeoDjango model through OWS.

- Build custom services on top of GeoDjango models.